GARGI: Gaze-Aware Representative Group Image Selection

As cameras gain more features and more ways to take a photo, researchers keep looking for new ways to immerse users in the experience of taking a photo and reliving it as a memory. Burst mode and Live mode are two such features that have become very popular. Burst mode, also known as Continuous Shooting Mode (CSM), lets the photographer capture a rapid series of pictures without stopping: with burst mode enabled, the user takes numerous pictures by pressing and holding the camera shutter button. Apple Inc. introduced Live Photos in 2015 with the iPhone 6s, and the feature is available on later models. When the shutter button is pressed, the camera records a three-second video covering one and a half seconds before and one and a half seconds after the actual shot. This lets the live photo come to life when the user taps and holds it in the Photos gallery.

Apple lets the user edit a live photo and select the frame that becomes the key photo, but this requires manual work. Alternatively, the system can select a key photo automatically. However, Apple's key photo detection does not appear to consider the subjects' gaze, especially in group pictures with more than one human subject. The subjects' gaze is one of the critical characteristics that determine the aesthetic quality of the key photo of a live group image. Gaze uniformity is the number of subjects in a group image looking in the same direction divided by the total number of subjects. It is often hard to take a uniformly gazed photo in group settings or social gatherings, especially when several people are photographing the same group at once: some subjects may look into one camera while others look toward another. We believe that a captured photo is more aesthetically pleasing when all the subjects look into the same camera, unless they were explicitly asked to do otherwise.
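The gaze uniformity ratio defined above can be illustrated with a minimal Python sketch. It assumes each subject's gaze has already been reduced to a discrete direction label (the labels `"center"` and `"left"` are hypothetical), and it takes "looking in the same direction" to mean the largest group of subjects sharing one label. The function name `gaze_uniformity` is illustrative and does not come from the GARGI implementation.

```python
from collections import Counter
from typing import Hashable, Sequence


def gaze_uniformity(directions: Sequence[Hashable]) -> float:
    """Fraction of subjects that share the most common gaze direction.

    `directions` holds one (hypothetical) direction label per detected subject.
    Returns 0.0 when no subjects are given.
    """
    if not directions:
        return 0.0
    # Size of the largest group of subjects looking the same way.
    largest_group = Counter(directions).most_common(1)[0][1]
    return largest_group / len(directions)


# Three of four subjects look at the same camera.
frame_labels = ["center", "center", "left", "center"]
print(gaze_uniformity(frame_labels))  # 0.75
```

A score of 1.0 means every subject looks in the same direction, so under this reading a frame with a higher score would be a stronger key photo candidate.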

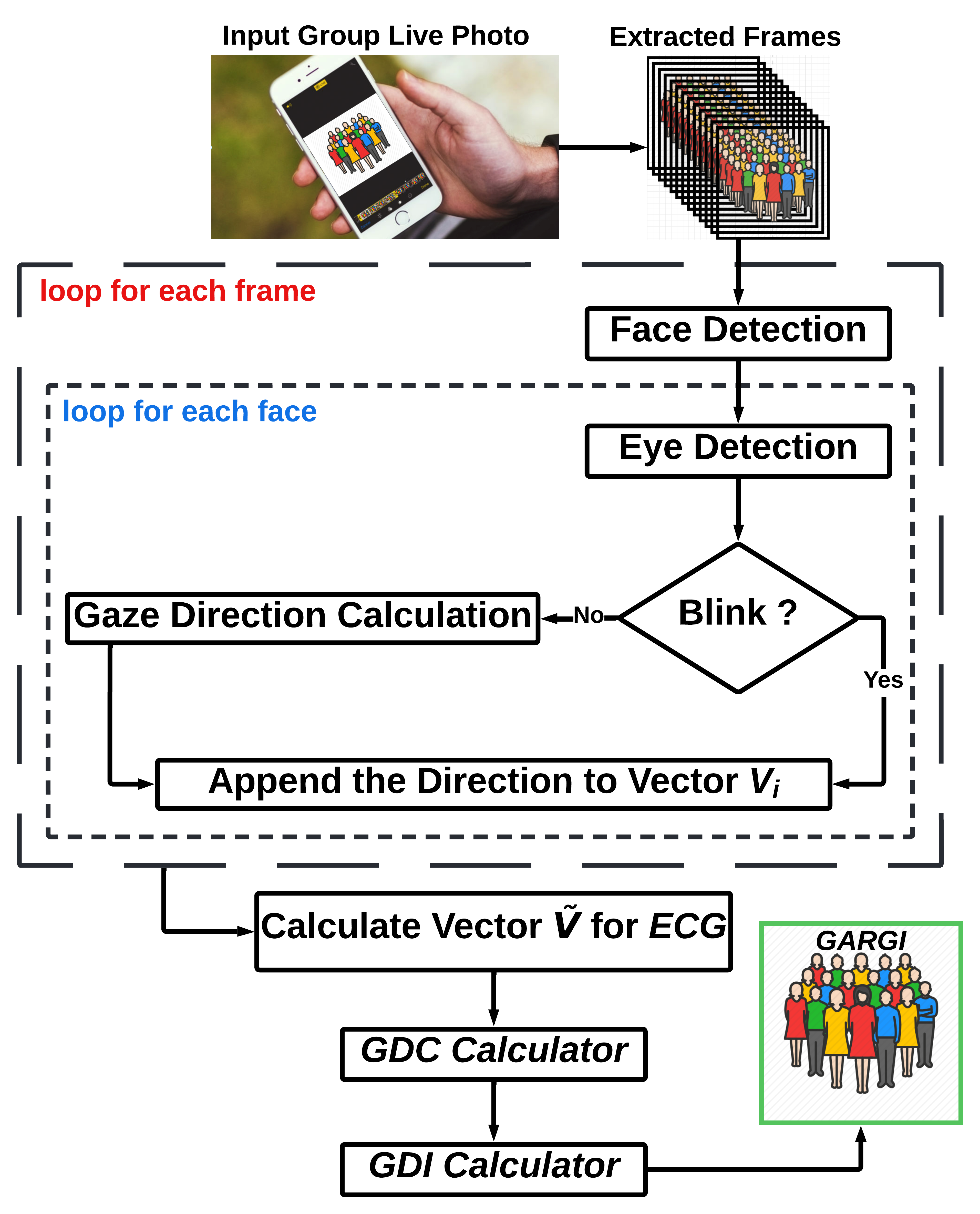

The method starts with a live photo and breaks it down into multiple frames. We then apply the RetinaFace face detector to each extracted frame to detect all the faces in the group photo. Next, we loop over each detected face to isolate the eyes and locate the pupil centers. To isolate the eye frame, we use the Dlib-ml library, which provides sixty-eight facial landmarks, and to obtain the pupil center coordinates we use a pupil detection implementation inspired by YOLO and convolutional neural networks. The isolated eye frame is then passed to the blink detector, which uses a metric called Eye Aspect Ratio (EAR). EAR is computed from the facial landmarks and measures the distance between the eyelid and the bottom of the eye. If the EAR falls below a threshold, the eye is assumed to be closed, a blink is recorded for that face, and we move on to the next face. If no blink is detected, the eye frame is passed to the gaze direction calculator.
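The blink check described above can be sketched as follows. The text does not give the exact EAR formula or threshold, so this sketch assumes the commonly used six-landmark formulation, EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|), where p1 and p4 are the eye corners, p2 and p3 lie on the upper eyelid, and p5 and p6 lie on the lower eyelid. The threshold value `0.2` and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical threshold; the value used by the method is not stated in the text.
EAR_THRESHOLD = 0.2


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye Aspect Ratio from six eye landmarks ordered p1..p6.

    p1, p4: eye corners; p2, p3: upper eyelid; p5, p6: lower eyelid.
    """
    if len(eye) != 6:
        raise ValueError("expected exactly six eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    # Two eyelid-to-eyelid distances, normalised by the eye width.
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def is_blinking(eye: Sequence[Point], threshold: float = EAR_THRESHOLD) -> bool:
    """Treat the eye as closed when its EAR falls below the threshold."""
    return eye_aspect_ratio(eye) < threshold


# Synthetic landmarks (not real detector output) for an open and a nearly closed eye.
open_eye = [(0, 0), (1, -1), (2, -1), (3, 0), (2, 1), (1, 1)]
closed_eye = [(0, 0), (1, -0.1), (2, -0.1), (3, 0), (2, 0.1), (1, 0.1)]
print(is_blinking(open_eye), is_blinking(closed_eye))
```

Because the eyelid distances are divided by the eye width, the ratio stays roughly constant as a face moves closer to or farther from the camera, which is why a single threshold can be applied to every face in the group.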

- O Kulkarni, S Arora and PK Atrey. GARGI: Selecting Gaze-Aware Representative Group Image from a Live Photo, The 5th IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, August 2022 (To Appear)

- O Kulkarni, V Patil, SB Parikh, S Arora and PK Atrey. Can You All Look Here? Towards Determining Gaze Uniformity In Group Images, IEEE International Symposium on Multimedia (ISM), Taipei, Taiwan, December 2020